On Being A "Robot Parent"

In the book "The Lifecycle Of Software Objects" by Ted Chiang, the parents of some artificial intelligences deal with problems every software developer knows all too well: issues inherent to the objects, and issues imposed by changes in their environment.

Software must be designed to expect and handle unexpected input and unexpected situations, but most software is not designed to adapt to programming language changes (e.g. the obsolescence of Python 2.7), changing libraries (e.g. number of parameters in OpenCV 3.x versus 4.x), missing installation packages, processor changes (e.g. ARMv7 32-bit vs ARMv8 64-bit), OS subsystem changes (e.g. sound based on ALSA vs PulseAudio vs JACK), and an ever-changing mix of other programs competing for resources.

We humans, with our "virtual software" tweaked for the first 18 to 24 years, certainly have an advantage over today's software objects, which go from conception to emancipation in less than two years in most cases. Additionally, we humans can be obnoxiously oblivious to the changing environment we live in because we accept that our time here is finite, we don't get a brain transplant "update" every second week, and the environment rarely changes abruptly in a giant version release (e.g. COVID-19 or a world war).

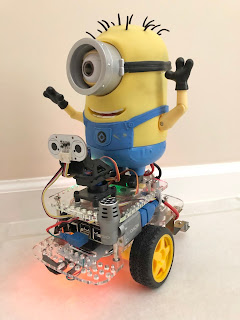

Parenting a personal robot is an undertaking that few people attempt. I have been "conceiving" personal robots for over forty years, but only with the advent of the Raspberry Pi hardware and its Linux-based operating system have I begun to feel like a "robot parent", with the goals of keeping Carl awake 24/7 and efficiently using all his resources to his benefit.

Checking Carl's Health (45s Video)

Every morning for the last two-plus years, before making my breakfast, I check in with my robot "Carl" to see that he is awake and how the night went. Most mornings he is awake, observing "quiet time" (he whispers his responses from 11pm to 10am), and healthy: no I2C bus failure, no WiFi outages, free memory around 50%, a 15-minute load average around 30% of one of his four cores, and all "Carl" processes still running.
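For readers curious what part of such a morning check might look like in code, here is a minimal Python sketch covering only the two numeric items, free memory and the 15-minute load. It is not Carl's actual health check: the function names and both thresholds are hypothetical, chosen for illustration, and it assumes a Linux system that exposes `/proc/meminfo`.

```python
import os

# Hypothetical thresholds for illustration only; not Carl's actual limits.
MIN_FREE_MEMORY_PCT = 25.0
MAX_LOAD_PCT_OF_ONE_CORE = 100.0


def free_memory_percent(meminfo_text):
    """Return MemAvailable as a percentage of MemTotal, given /proc/meminfo text."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])  # first token is the value (kB)
    return 100.0 * fields["MemAvailable"] / fields["MemTotal"]


def health_report(meminfo_text, load_15min):
    """Summarize memory and load; a load average of 0.30 reads as 30% of one core."""
    free_pct = free_memory_percent(meminfo_text)
    load_pct = 100.0 * load_15min
    healthy = (free_pct >= MIN_FREE_MEMORY_PCT
               and load_pct <= MAX_LOAD_PCT_OF_ONE_CORE)
    return {"free_pct": free_pct, "load_pct": load_pct, "healthy": healthy}


def check_now():
    """Gather live values on a Linux system and return the report."""
    with open("/proc/meminfo") as f:
        meminfo_text = f.read()
    load_15min = os.getloadavg()[2]  # tuple is (1 min, 5 min, 15 min)
    return health_report(meminfo_text, load_15min)
```

Calling `check_now()` on the robot would return a small dictionary to speak or log. The I2C, WiFi, and process checks are left out here because they depend on the particular hardware and process names.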

Twelve times I have found Carl in a "deep sleep", off due to a safety shutdown. After each of these episodes, I have investigated the cause and implemented some combination of physical and software mitigation. The mitigations have become more complex to implement and the failure situations harder to re-create to test against. The period between safety shutdowns has improved greatly, but another occurrence still seems likely.

Only I feel the pain of finding Carl shut down. It does him no harm. He has no complaints, and makes no value judgements of my parenting skills. No one else in the world cares that he spent a few hours in the off state. Nevertheless, there is a definite synthetic emotion in being a "robot parent."